Over the last decade or so, ‘invertebrate cognition’ has become a respectable phrase. Some extraordinary new experiments and theoretical discoveries about the capacities of tiny nervous systems have forced us to recognize that many or most invertebrates possess behavioral and cognitive repertoires that rival those of big-brained vertebrates. One might wonder what lessons this realization holds for the philosophy of mind. I recently had the pleasure of speaking to the students of Walter Ott’s “Animal Minds” class at the University of Virginia on just this question (you can find my presentation here). Aside from an overview of the richness of invertebrate cognitive life, I defended two theses: (i) the variety of cognitive capacities exhibited by an organism depends on factors like modularity, not size; and (ii) the use of computer metaphors often obscures the fact that there are lots of ways (structurally and algorithmically) to implement learning.

Let’s start with the richness of invertebrate cognition. Most everyone has heard about the surprising capacities of octopuses to solve problems (like opening a screw-top jar to get at a yummy crab) or to learn from observation. If you haven’t, then check out the videos listed at the bottom of this post right away. What has gone largely unnoticed is the extent to which similar abilities have been shown to manifest throughout the animal kingdom. My personal favorites are the insects. To a first approximation, all species of multicellular animals are insects. Their diversity of form and lifestyle is breathtaking. And so too, it turns out, are their cognitive capacities. Perhaps unsurprisingly, insects (honeybees and the fruit fly Drosophila, for instance) are capable of operant learning. (That’s when an animal learns the relationship between its own actions and the attainment of a desirable state of affairs.) More surprisingly, crickets have been shown to learn from observation (Giurfa & Menzel, 2013). Specifically, they learned to hide under leaves in the presence of wolf spiders by watching their conspecifics do so. That’s definitely not a habit you can pick up through classical conditioning, since the penalty for making the wrong choice is death.

As fascinating as cricket behavior might be, honeybee cognition is where all the action is, at least so far as experiments have revealed. Honeybees are relatively big-brained for insects (they have nervous systems of about 1 million neurons). And they are capable of some very impressive things, including categorization, concept formation, and processing multiple concepts at once. The first involves learning to treat discernibly different stimuli as the same. For instance, bees can be trained to classify images as ‘flowers’, ‘stems’, or ‘landscapes’ (Zhang et al., 2004). Once they’ve learned the categories, they will reliably group brand new images they’ve never encountered before into the right category. So-called ‘concept formation’ involves classifying things on the basis of relational properties that are independent of the physical nature of the stimulus. Bees can learn relations such as ‘sameness/difference’, ‘above/below’, and ‘different from’. Honeybees can even handle two of these concepts at once, choosing, for instance, the thing which is both above and to the right (Giurfa & Menzel, 2013). But that’s not the end of it. Honeybees communicate symbolically (via the famous ‘waggle dance’), build complex spatial maps that let them dead-reckon their way home, and can count up to four (Chittka & Niven, 2009; Dacke & Srinivasan, 2008). That last one is particularly striking.

What I find even more incredible, however, is just how simple a nervous system can be while still supporting rich learning capacities. Even the lowly roundworm, Caenorhabditis elegans, with its meager 302 neurons, is capable of learning by classical conditioning, differential conditioning, context conditioning, and more (Rankin, 2004). For a rich overview of the current state of the art across the full range of studied invertebrates (from slugs to bugs), check out the book Invertebrate Learning and Memory, edited by Menzel and Benjamin (2013).

Of course, all of this rich learning behavior exhibited by animals with modest nervous systems already suggests there’s something wrong with the presumed association between brain size and cognitive repertoire. But it’s only suggestive. An explicit argument can be found, however, in the excellent review article by Chittka and Niven (2009), entitled “Are bigger brains better?” In short, the exhilarating answer they defend is “no.” They point to two reasons to believe that small nervous systems are capable of the same breadth and diversity of cognitive functions as big brains. The first is the sort of behavior actually observed in small-brained animals, some of which I described above. The second is theoretical work showing that shockingly small neural nets suffice for some complex tasks. They point, for instance, to studies showing that 12 neurons suffice for simple categorization tasks, while something in the ballpark of 500 are needed for basic numerical abilities, such as parsing a scene into discrete objects and counting them. Why, then, do we find so many big brains? If they don’t significantly increase your behavioral repertoire, what do they get you? Here again Chittka and Niven give a twofold answer. First, bigger bodies, with more muscles to innervate, require more neurons to run them. Second, increasing the size of particular neuronal modules can increase the associated capacity. Your visual acuity is enormously better than that of a honeybee, and your long-term memory is vastly superior to that of a slug. In short, big brains don’t bring a new kind of cognition, just more and better versions of the same. I find the case compelling, but I encourage the reader to assess the paper for himself.
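To get a feel for how little machinery simple categorization can require, here is a toy sketch of my own (not one of the models Chittka and Niven cite): a single artificial threshold unit trained with the classic perceptron rule. The stimuli are hypothetical, each reduced to two invented feature scores between 0 and 1; the category labels echo Zhang et al.’s bee experiments, but the features and numbers are made up purely for illustration.

```python
# Hypothetical stimuli: each is reduced to two invented feature scores in [0, 1]
# (say, "radial symmetry" and "color saturation"). Label +1 = flower, -1 = landscape.
# These numbers are illustrative only, not data from any experiment.
flowers = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.9), (0.9, 0.6)]
landscapes = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.2), (0.2, 0.4)]
training = [(x, 1) for x in flowers] + [(x, -1) for x in landscapes]


def train_perceptron(samples, epochs=20, lr=0.1):
    """Classic perceptron learning rule for a single threshold unit."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in samples:
            predicted = 1 if w[0] * x1 + w[1] * x2 + b > 0 else -1
            if predicted != label:
                # Nudge the decision boundary toward classifying this stimulus correctly.
                w[0] += lr * label * x1
                w[1] += lr * label * x2
                b += lr * label
    return w, b


def classify(w, b, x1, x2):
    """One weighted sum and a threshold decide the category."""
    return "flower" if w[0] * x1 + w[1] * x2 + b > 0 else "landscape"


w, b = train_perceptron(training)

# Stimuli the unit never saw during training:
for stimulus in [(0.85, 0.75), (0.15, 0.3), (0.6, 0.7)]:
    print(stimulus, "->", classify(w, b, *stimulus))
```

Once trained, the unit sorts novel stimuli it was never shown. Whether any real insect circuit does something similar is an empirical question this toy can’t answer; it only shows that generalizing to new members of a category doesn’t, in principle, demand a big network.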

As an aside, there’s more than one way a brain can be small. On the one hand, you might reduce the number of neurons, as in C. elegans. On the other, you might reduce the size of the neurons. There is currently a sub-field of neurobiology that considers the ways in which nervous systems have been miniaturized. I won’t say much about this except to share an example that completely blows my mind every time I look at it. Here’s a figure from Niven & Farris (2012):

![Extreme reduction in body size in an insect. (A) SEM of an adult Megaphragma mymaripenne parasitic wasp. (B) The protozoan Paramecium caudatum for comparison. The scale bar is 200 μm. Adapted from [5].](https://www.ratiocination.org/wp-content/uploads/2014/11/TinyWasp-300x262.png)

Extreme reduction in body size in an insect. (A) SEM of an adult Megaphragma mymaripenne parasitic wasp. (B) The protozoan Paramecium caudatum for comparison. The scale bar is 200 μm. Adapted from [5].

Finally, what about those ubiquitous computer metaphors? I said at the beginning that we are often led astray when we speak of brains in terms of computers. Specifically, we tend to assume that a given input-output relation exhibited by a particular organism in a learning task is realized by an algorithm similar to the one we would choose to accomplish the task. Furthermore, we often assume that the algorithm will be implemented in structures closely analogous to the computational devices we are used to working with: computers with a von Neumann architecture. I suggest that both of these assumptions are suspect.

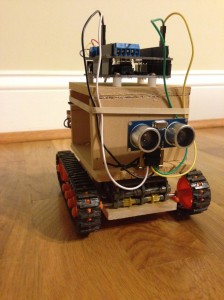

To make the point for the students of Prof. Ott’s class, I asked them to think about solving a maze. What sort of brain would we have to give a robot that can solve a modestly complex maze? Think about the tasks it would need to accomplish. It needs to control at least a couple of motors in order to propel itself and change direction. It needs some way to process sensory information in order to know when it is approaching a wall. For efficiency, it would be great if it had some sort of memory or built an explicit representation of the maze as it went along. If you want the robot to perform robustly, even more is required. It has to be able to get unstuck should it get jammed in a corner, it has to be able to detect when it has capsized and execute a procedure to right itself, and so on.
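The last requirement, building an explicit representation of the maze along the way, is exactly what a programmer’s instincts suggest. Here is a minimal Python sketch of that conventional design, assuming (hypothetically) that the maze has already been reduced to a grid of wall (`#`) and open (`.`) cells; a real robot would first have to turn noisy sensor readings into such a grid. The solver keeps a map of visited cells and backtracks out of dead ends with a depth-first search. It illustrates the kind of algorithm our computer metaphors lead us to expect, not the code any particular Arduino maze solver actually runs.

```python
# Hypothetical grid maze: '#' = wall, '.' = open floor, 'S' = start, 'E' = exit.
MAZE = [
    "#########",
    "#S..#...#",
    "#.#.#.#.#",
    "#.#...#.#",
    "#.#####.#",
    "#...#..E#",
    "#########",
]

MOVES = ((0, 1), (1, 0), (0, -1), (-1, 0))  # east, south, west, north


def find(maze, symbol):
    """Return the (row, col) of the first cell containing `symbol`."""
    for r, row in enumerate(maze):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in maze")


def explore(maze):
    """Depth-first search that remembers every cell it has visited.

    `visited` plays the role of the robot's internal map; each stack entry
    carries the route taken so far, so dead ends are abandoned by backtracking.
    Returns the route from S to E as a list of (row, col) cells, or None.
    """
    start, goal = find(maze, "S"), find(maze, "E")
    visited = set()
    stack = [(start, [start])]
    while stack:
        cell, path = stack.pop()
        if cell in visited:
            continue
        visited.add(cell)
        if cell == goal:
            return path
        r, c = cell
        for dr, dc in MOVES:
            nxt = (r + dr, c + dc)
            # The maze is walled on every side, so indices stay in range.
            if maze[nxt[0]][nxt[1]] != "#" and nxt not in visited:
                stack.append((nxt, path + [nxt]))
    return None


route = explore(MAZE)
print(f"{len(route) - 1} moves:", route)
```

Even this stripped-down version needs memory (the visited set and the stack) and a deliberate search strategy, and it takes for granted the motor control, wall sensing, and recovery behaviors listed above.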

All of these considerations might lead you to believe that you need something like this:

This is a robot I’ve been building with my children. There’s some debate about its name (Drivey versus Runny). The salient point is that its brain is an Arduino Uno, a hobbyist board built around the ATmega328. That’s a microcontroller with about 32 kilobytes of programmable memory. Some clever young inventors have put exactly this board to use in solving mazes, uploading programs that accomplish many or most of the tasks I mentioned above. Here’s one example:

With these requirements for a maze solver in mind, I then performed the following demonstration (the video is not actually from the class at UVa – that’s my son’s hand inserting the robot in a home demonstration):

If you watched the above video, what you saw was a small toy (a Hexbug Nano) solve an ungainly Lego maze in a matter of seconds. More to the point, the toy has no brain. That is, there is no microcontroller, microprocessor, or even integrated circuit in the whole thing. The only moving part is a cell-phone vibrator. That brainless machine (which is really a random walker) can right itself if it tips over, almost never gets stuck, and—as you can see—rapidly accomplishes the task that our computer metaphors led us to believe would require significant computing power. You might object that in some sense parts of the relevant algorithm are built into the shape and mechanical properties of the toy. And you’d be right. My point is that there is more than one way to accomplish a cognitive task, and far more than one way to implement an algorithm. Think about that the next time you watch a slug ambling across a garden leaf—it’s much smarter than you think.
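For a rough feel of why a brainless walker can still succeed, here is a toy model in Python. It is an assumption-laden simplification, not a description of the Hexbug’s physics (it deliberately leaves out the body shape and mechanics that do real work in the toy): at every tick the walker picks one of four directions at random, moves if the way is clear, and simply bumps to a stop if it hits a wall. It has no map, no memory, and no notion of where the exit is. The grid maze is hypothetical.

```python
import random

# Hypothetical grid maze: '#' = wall, '.' = open floor, 'S' = start, 'E' = exit.
MAZE = [
    "#########",
    "#S..#...#",
    "#.#.#.#.#",
    "#.#...#.#",
    "#.#####.#",
    "#...#..E#",
    "#########",
]

MOVES = ((0, 1), (1, 0), (0, -1), (-1, 0))  # east, south, west, north


def find(maze, symbol):
    """Return the (row, col) of the first cell containing `symbol`."""
    for r, row in enumerate(maze):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in maze")


def random_walk(maze, seed=None, max_steps=100_000):
    """Blind random walk: no map, no memory, no goal-seeking.

    Each tick the walker tries a random direction; a wall just blocks the move.
    Returns the number of ticks taken to reach E, or None if it gives up
    after `max_steps`.
    """
    rng = random.Random(seed)
    r, c = find(maze, "S")
    for tick in range(1, max_steps + 1):
        dr, dc = rng.choice(MOVES)
        if maze[r + dr][c + dc] != "#":  # walls border the maze, so indices stay valid
            r, c = r + dr, c + dc
        if maze[r][c] == "E":
            return tick
    return None


for seed in range(5):
    print(f"seed {seed}: reached the exit after {random_walk(MAZE, seed)} ticks")
```

The walker wastes a great many moves, but each one costs almost nothing. In a small, connected maze, cheap repetition is enough to stumble onto the exit; no stored representation of the maze is required.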

References:

Chittka, Lars, and Jeremy Niven. 2009. “Are Bigger Brains Better?” Current Biology 19 (21): R995–1008. doi:10.1016/j.cub.2009.08.023.

Dacke, Marie, and Mandyam V. Srinivasan. 2008. “Evidence for Counting in Insects.” Animal Cognition 11 (4): 683–89. doi:10.1007/s10071-008-0159-y.

Giurfa, Martin, and Randolf Menzel. 2013. “Cognitive Components of Insect Behavior.” In Invertebrate Learning and Memory, edited by Randolf Menzel and Paul Benjamin. Burlington: Academic Press.

Menzel, Randolf, and Paul Benjamin, eds. 2013. Invertebrate Learning and Memory. Burlington: Academic Press.

Niven, Jeremy E., and Sarah M. Farris. 2012. “Miniaturization of Nervous Systems and Neurons.” Current Biology 22 (9): R323–29. doi:10.1016/j.cub.2012.04.002.

Perry, Clint J, Andrew B Barron, and Ken Cheng. 2013. “Invertebrate Learning and Cognition: Relating Phenomena to Neural Substrate.” Wiley Interdisciplinary Reviews: Cognitive Science 4 (5): 561–82. doi:10.1002/wcs.1248.

Rankin, Catharine H. 2004. “Invertebrate Learning: What Can’t a Worm Learn?” Current Biology 14 (15): R617–18. doi:10.1016/j.cub.2004.07.044.

Roth, Gerhard. 2013. “Invertebrate Cognition and Intelligence.” In The Long Evolution of Brains and Minds, 107–15. Springer Netherlands. doi:10.1007/978-94-007-6259-6_8.

Zhang, Shaowu, Mandyam V. Srinivasan, Hong Zhu, and Jason Wong. 2004. “Grouping of Visual Objects by Honeybees.” Journal of Experimental Biology 207 (19): 3289–98. doi:10.1242/jeb.01155.